Apache Hadoop is an open-source software framework that supports data-intensive distributed applications, licensed under the Apache v2 license. It supports the running of applications on large clusters of commodity hardware. Hadoop was derived from Google's MapReduce and Google File System (GFS) papers.

This guide installs Hadoop on Ubuntu as a pseudo-distributed, single-node cluster backed by the Hadoop Distributed File System (HDFS).

Prerequisites:

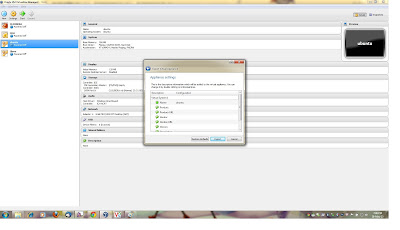

Install Oracle VirtualBox 4.0 and download an Ubuntu ISO image.

Install Java 1.6 or higher.

Run the following commands in an Ubuntu terminal:

$ sudo apt-get install python-software-properties

$ sudo add-apt-repository ppa:ferramroberto/java

# Update the source list

$ sudo apt-get update

# Install OpenJDK 6

$ sudo apt-get install openjdk-6-jdk

The full JDK will be placed in /usr/lib/jvm/java-6-openjdk-amd64/.

Change the hostname from ubuntu to master:

$ sudo vi /etc/hostname

Then restart the Ubuntu VM.

Find the IP address of the Ubuntu VM with the ifconfig command.

Add the IP address and the hostname to the hosts file (the IP address comes first on the line):

$ sudo vi /etc/hosts

ex: 10.128.20.35 master
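Editing the hosts file by hand is easy to get wrong or to repeat by accident. As a minimal sketch, the helper below appends an "IP hostname" line only when the hostname is not already mapped. The function name add_host_entry is hypothetical, and the IP address is just the example value used above.

```shell
#!/bin/sh
# Append "IP<TAB>hostname" to a hosts-style file unless the hostname
# already appears as a name on a non-comment line.
# Arguments: hosts file path, IP address, hostname.
add_host_entry() {
    hosts_file=$1
    ip=$2
    name=$3
    # Field 1 is the IP address; fields 2..NF are the names mapped to it.
    if awk -v n="$name" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$hosts_file"
    then
        return 0    # already present, nothing to do
    fi
    printf '%s\t%s\n' "$ip" "$name" >> "$hosts_file"
}
```

Called as add_host_entry /etc/hosts 10.128.20.35 master, it needs root privileges because /etc/hosts is owned by root. Running it a second time leaves the file unchanged.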

Verify the Java installation:

user@ubuntu:~$ java -version

java version "1.6.0_20"

Java(TM) SE Runtime Environment (build 1.6.0_20-b02)

Java HotSpot(TM) Client VM (build 16.3-b01, mixed mode, sharing)
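The "Java 1.6 or higher" prerequisite can also be checked from a script. The sketch below pulls the quoted version string out of the first line of the java -version output and compares it against 1.6. Both function names are hypothetical, and the parsing assumes the output format shown above.

```shell
#!/bin/sh
# Print the quoted version from the first line of `java -version` output,
# e.g. the line: java version "1.6.0_20"  gives  1.6.0_20
java_version_of() {
    printf '%s\n' "$1" | sed -n '1s/.*version "\([^"]*\)".*/\1/p'
}

# Succeed if a version string such as 1.6.0_20 is at least 1.6.
java_at_least_1_6() {
    major=$(printf '%s' "$1" | cut -d. -f1)
    minor=$(printf '%s' "$1" | cut -d. -f2)
    [ "$major" -gt 1 ] || { [ "$major" -eq 1 ] && [ "$minor" -ge 6 ]; }
}
```

Because java -version writes to standard error, a call would look like java_at_least_1_6 "$(java_version_of "$(java -version 2>&1)")".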

Adding a dedicated Hadoop system user

We will use a dedicated Hadoop user account for running Hadoop. While that's not required, it is recommended because it helps separate the Hadoop installation from other software applications and user accounts running on the same machine (think: security, permissions, backups, etc.).

HADOOP LOCATION : /usr/local/hadoop

$ sudo addgroup hadoop

$ sudo adduser --ingroup hadoop hduser

This will add the user hduser and the group hadoop to your local machine.
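To confirm that the account and group were created as intended, a small check like the following may help. It is only a sketch: the function name user_in_group is hypothetical, and it relies on the standard id command.

```shell
#!/bin/sh
# Succeed if the given user exists and belongs to the given group.
# Arguments: user name, group name.
user_in_group() {
    # id -nG prints the user's group names separated by spaces.
    id -nG "$1" 2>/dev/null | tr ' ' '\n' | grep -qxF -- "$2"
}
```

For the account created above, user_in_group hduser hadoop && echo "hduser is in hadoop" should print the message.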

Configuring SSH

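Hadoop uses SSH to manage its nodes, so hduser needs key-based SSH access to localhost. First, generate an SSH key for hduser:

user@ubuntu:~$ su - hduser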

hduser@ubuntu:~$ ssh-keygen -t rsa -P ""

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hduser/.ssh/id_rsa):

Created directory '/home/hduser/.ssh'.

Your identification has been saved in /home/hduser/.ssh/id_rsa.

Your public key has been saved in /home/hduser/.ssh/id_rsa.pub.

The key fingerprint is:

9b:82:ea:58:b4:e0:35:d7:ff:19:66:a6:ef:ae:0e:d2 hduser@ubuntu

The key's randomart image is:

[...snipp...]

hduser@ubuntu:~$

Next, enable SSH access to your local machine with this newly created key.

hduser@ubuntu:~$ cat $HOME/.ssh/id_rsa.pub >> $HOME/.ssh/authorized_keys

hduser@ubuntu:~$ ssh localhost

The authenticity of host 'localhost (::1)' can't be established.

RSA key fingerprint is d7:87:25:47:ae:02:00:eb:1d:75:4f:bb:44:f9:36:26.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'localhost' (RSA) to the list of known hosts.

Linux ubuntu 2.6.32-22-generic #33-Ubuntu SMP Wed Apr 28 13:27:30 UTC 2010 i686 GNU/Linux

Ubuntu 10.04 LTS

[...snipp...]

hduser@ubuntu:~$
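Running the cat command above more than once adds the same key repeatedly, and sshd's StrictModes check can reject key files whose permissions are too open. The sketch below appends the key only once and tightens the permissions. The function name authorize_key is hypothetical.

```shell
#!/bin/sh
# Append a public key to an authorized_keys file only if it is not
# already there, then restrict permissions on the file and its directory.
# Arguments: public key file, authorized_keys file.
authorize_key() {
    pub_key_file=$1
    auth_file=$2
    mkdir -p "$(dirname "$auth_file")"
    touch "$auth_file"
    # -F fixed strings, -x whole-line match, -f read the pattern (the key) from a file
    grep -qxFf "$pub_key_file" "$auth_file" || cat "$pub_key_file" >> "$auth_file"
    chmod 700 "$(dirname "$auth_file")"
    chmod 600 "$auth_file"
}
```

As hduser, the equivalent of the step above would be authorize_key "$HOME/.ssh/id_rsa.pub" "$HOME/.ssh/authorized_keys".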

Hadoop Installation

The following commands assume that hadoop-1.0.3.tar.gz has already been downloaded to /usr/local.

$ cd /usr/local

$ sudo tar xzf hadoop-1.0.3.tar.gz

$ sudo mv hadoop-1.0.3 hadoop

$ sudo chown -R hduser:hadoop hadoop
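The extract and rename steps can be combined into one. As a rough sketch, the helper below unpacks a release tarball straight into a target directory and drops the versioned top-level folder. The function name unpack_release is hypothetical, and --strip-components is assumed to be available (it is in GNU tar, which Ubuntu ships).

```shell
#!/bin/sh
# Unpack a .tar.gz whose contents sit under one top-level directory
# (for example hadoop-1.0.3/) directly into the target directory.
# Arguments: tarball path, target directory.
unpack_release() {
    tarball=$1
    target=$2
    mkdir -p "$target"
    # --strip-components=1 removes the leading "hadoop-1.0.3/" path element
    tar xzf "$tarball" -C "$target" --strip-components=1
}
```

Run as root, unpack_release /usr/local/hadoop-1.0.3.tar.gz /usr/local/hadoop would replace the tar and mv steps; the chown step is still needed afterwards.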

Add the following lines to .bashrc:

# Set Hadoop-related environment variables

export HADOOP_HOME=/usr/local/hadoop

# Set JAVA_HOME (we will also configure JAVA_HOME directly for Hadoop later on)

export JAVA_HOME=/usr/lib/jvm/java-6-openjdk-amd64

# Add Hadoop bin/ directory to PATH

export PATH=$PATH:$HADOOP_HOME/bin
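After opening a new shell, it can be worth checking that the Hadoop bin/ directory really ended up on PATH. The sketch below tests whether a directory is one of the colon-separated entries of a PATH-style string. The function name path_contains is hypothetical.

```shell
#!/bin/sh
# Succeed if the directory is one of the colon-separated entries
# of a PATH-style string.
# Arguments: PATH-style string, directory.
path_contains() {
    # Wrapping both sides in colons makes first, middle and last entries match alike.
    case ":$1:" in
        *":$2:"*) return 0 ;;
        *) return 1 ;;
    esac
}
```

For example: path_contains "$PATH" "$HADOOP_HOME/bin" && echo "Hadoop is on PATH".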

Configuration

In /usr/local/hadoop/conf/hadoop-env.sh, set JAVA_HOME:

# The java implementation to use. Required.

export JAVA_HOME=/usr/lib/jvm/java-6-openjdk-amd64

Create the directory that Hadoop will use for its temporary files and give hduser ownership of it:

$ sudo mkdir -p /app/hadoop/tmp

$ sudo chown hduser:hadoop /app/hadoop/tmp

# ...and if you want to tighten up security, chmod from 755 to 750...

$ sudo chmod 750 /app/hadoop/tmp
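Mode 750 gives the owner full access, the group read and execute access, and everyone else no access. A quick way to confirm the result is sketched below. The function name check_dir_mode is hypothetical, and stat -c is the GNU coreutils form found on Ubuntu.

```shell
#!/bin/sh
# Succeed if the path is a directory whose octal permission bits
# equal the expected mode.
# Arguments: directory path, expected mode (for example 750).
check_dir_mode() {
    [ -d "$1" ] && [ "$(stat -c '%a' "$1")" = "$2" ]
}
```

For example: check_dir_mode /app/hadoop/tmp 750 || echo "unexpected permissions".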

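Hadoop 1.x reads its site-specific settings from XML files in /usr/local/hadoop/conf. The properties to set are:

hadoop.tmp.dir (in core-site.xml), set to /app/hadoop/tmp: A base for other temporary directories.

fs.default.name (in core-site.xml): The name of the default file system. A URI whose scheme and authority determine the FileSystem implementation. The uri's scheme determines the config property (fs.SCHEME.impl) naming the FileSystem implementation class. The uri's authority is used to determine the host, port, etc. for a filesystem.

mapred.job.tracker (in mapred-site.xml): The host and port that the MapReduce job tracker runs at. If "local", then jobs are run in-process as a single map and reduce task.

dfs.replication (in hdfs-site.xml): Default block replication. The actual number of replications can be specified when the file is created. The default is used if replication is not specified in create time.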
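To make the settings above concrete, here is a sketch that writes the three site files for a single-node setup. Apart from /app/hadoop/tmp, every value is an assumption for illustration: the host localhost, the ports 54310 and 54311, and a replication factor of 1 do not come from the text and should be adapted. The function name write_site_configs is hypothetical.

```shell
#!/bin/sh
# Write minimal single-node site files into a Hadoop 1.x conf directory.
# Arguments: conf directory, optional file system URI, optional job tracker address.
# ASSUMPTION: the default host, ports and replication factor are illustrative only.
write_site_configs() {
    conf_dir=$1
    fs_uri=${2:-hdfs://localhost:54310}
    job_tracker=${3:-localhost:54311}

    cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/app/hadoop/tmp</value>
  </property>
  <property>
    <name>fs.default.name</name>
    <value>$fs_uri</value>
  </property>
</configuration>
EOF

    cat > "$conf_dir/mapred-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>$job_tracker</value>
  </property>
</configuration>
EOF

    # Replication 1 is assumed because a single node can hold only one copy of a block.
    cat > "$conf_dir/hdfs-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
EOF
}
```

As hduser, write_site_configs /usr/local/hadoop/conf would write the files with the assumed defaults. Since the hostname was changed to master earlier, passing hdfs://master:54310 and master:54311 as the second and third arguments is another option.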